One of several papers on management

Bias and how to train to overcome it

Written by John Berry on 26th January 2021. Revised 26th February 2021.


All managers would hope that they make rational decisions. All would hope that they will conduct their day-to-day activities considering only assessments, ideas and plans that are developed as a result of critical thinking, duly supported by evidence.


The reality is a long way from this. Most managers make decisions using a mix of gut feel and repetition of past experience, based on little more than hearsay. The result is that managers’ decisions are open to the effects of bias.

Bias is best explained by comparing real decision-making with ideal decision-making. Ideal decision-making would always return the same result for a given scenario, independent of the person making the decision. So, under ideal decision-making, a thousand managers randomly distributed across the globe would all make the same decision, given the same scenario and information. Those managers would be a mix of women and men, young and old, white and BAME, engineers and sociologists, and every variant in between and many more besides. They would be completely objective, each making their decision using the same psychological processing engine and considering evidence alone. In reality, each decision-maker brings a different upbringing, education and experience to the task, modified in turn by their differing intelligence, personalities, identities, attitudes, beliefs and values. Their cognitive assessments would not all be the same. The result would be a thousand different decisions.

Some of those decisions might coalesce towards various normative forms. Culture might account for some of this. All managers in Japan, for example, might think similarly about a given scenario and make similar decisions. And all managers educated at UK public schools might likewise have similar ideas, resulting in similar decisions. But there would be as many differences as similarities. Bias is an idiosyncratic effect and must be understood, assessed and countered personally.

Bias, or rather being biased, is the term we use to describe the fact that everyone interprets and processes information differently, and hence everyone comes to different conclusions about each scenario they face. In principle, bias doesn’t matter. One could simply conclude that the differing conclusions and outcomes are part of the so-called rich tapestry of life. But in reality it does matter, because the result of this flawed decision-making is often that people get harmed.

As a result of bias, a manager might discriminate between a white person and a BAME person. The white person might be promoted, while the BAME person is given poor-quality work. As a result of bias, employees might bully a gay man to the point where he commits suicide. The likelihood and effect of such hurtful outcomes vary.

Bias is a serious societal problem.

Bias is individual, and hence it must be dealt with individually. No quick introduction of process or new technology will counter bias. It might be useful to recruit ‘blind’, ignoring names, genders and ages on CVs, but in the end such tactics only mask the problem, rather than overcome it. No technology or process can root out bias. Indeed, on occasion, the presence of technology to control bias itself introduces bias! Fundamentally, managers must change their worldview. They must unlearn their biased ways and learn to interpret and process data objectively. And that’s hugely difficult.

Bias training, leading ultimately to objective decision-making, is best done in three parts.

First, the manager must understand how bias works and the huge range of effects that result from it. We discuss this below and illustrate its scope in a diagram. This understanding will take time to achieve, requiring significant reading and reflection. During this period, the manager must form a view about what objective decision-making means for them and how they will tell an objective decision from a biased one.

Second, the manager must practise mindfulness. They must stop themselves at the start of every possible decision and ask, ‘How can I be objective about the data I’m presented with and the way I’m going to process it?’ And they must trap their own bias by writing down where they could go wrong and the undesirable effects bias might cause.

Third, the manager must introduce criteria, process and technology. Objective decision-making requires criteria. Criteria are principles or standards against which something can be judged. Criteria must be valid. Validity demands that each criterion helps predict a desirable business outcome, such as performance, quality or safety. We might, for example, say that someone with high abstract reasoning will perform well in a scientific job, and we’d use abstract reasoning as one of our decision-making criteria in personnel selection and development. That claim is well supported by evidence from publicly available academic research. Whether an applicant is black or white, male or female, or gay or straight, is irrelevant when it comes to personal performance in that job. To introduce bias would corrupt the decision by introducing a non-valid criterion. In our example, it only matters that they have high abstract reasoning.

Managers must ask themselves every minute of every day what criteria they will use to make the decision before them. Managers must build libraries of criteria for every decision they might make, and it’s here that process and technology help. There are many, many tools available. We discuss the various tools, processes and technologies in our book; indeed, the entire book focusses on objective, evidence-based decision-making using valid criteria.

But in the end, all decisions will still be biased, even if only slightly. With personal development and the use of tools, however, decisions will be less outlandish, less extreme and more objective, and managers and their staff will be more aware of the risks they run as a result of bias.

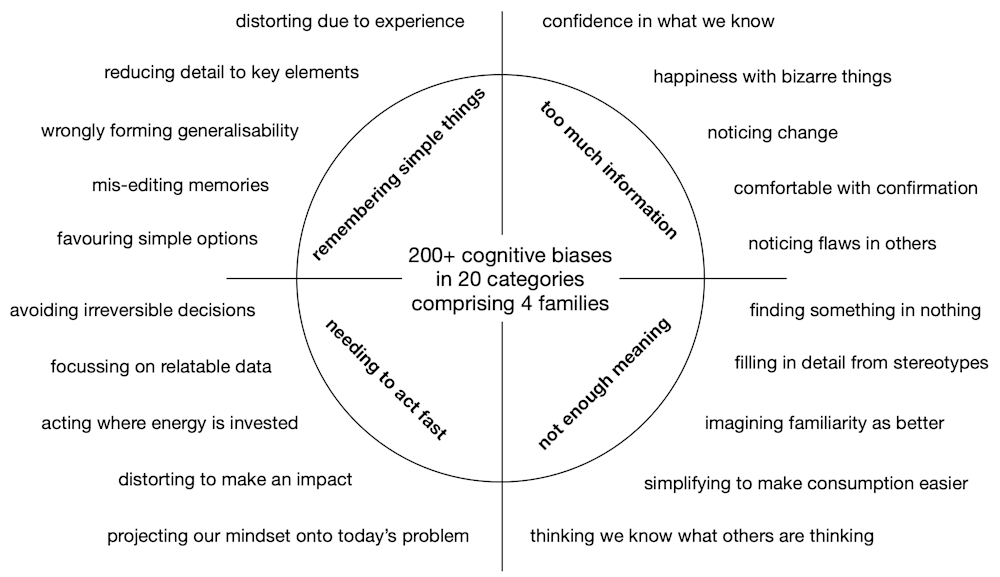

The problem with bias, and with countering it, is that there are simply too many identifiable biases to remember. Benson and Manoogian have developed the Cognitive Bias Codex, categorising over 200 biases into 20 categories and, in turn, into four families. But even that structure doesn’t make the list manageable.

The image here shows our simplification of those biases.

Under this example category, there are nine individually identifiable biases, such as Zero-Sum Bias. Under Zero-Sum Bias, a person assumes that for someone to gain, someone else must lose. The person’s worldview, expressed as a bias, prevents them from accepting that both parties could gain (or lose) as a result of a decision. Such a worldview drives their decisions towards win-lose outcomes rather than win-win ones. Each category covers between three and 21 discrete biases. Space precludes us from describing all 200-plus.

It’s hugely difficult to select biases that might be typical of managers. We consider the following four to be useful as examples.

• Frequency Illusion: once an event is noticed, it seems to happen again and again. Once a manager notices that someone is off sick, that person seems to be off sick again and again. In reality they’re not off sick often; it just seems that way.

• Confirmation Bias: managers tend to interpret what they see in a way that supports their prior beliefs. A manager who had a really great female marketing manager at a previous company may believe that no man will be as good in that role, and then read each male candidate’s record as confirming that belief.

• Stereotyping: managers tend to bundle people into types and form generalised opinions based on superficial observation. A manager might assume that because one engineer is judgemental (jumping quickly to conclusions), all engineers will be too.

• Primacy Effect: people tend to remember items at the beginning of a list better than those lost in the middle. This applies to many written and spoken lists, including those in documents such as CVs and disciplinary investigation reports.

It is futile for a manager to try to remember every form of bias and then scan the list at every decision. As we note above, it’s better to understand how to make a rational decision in the first place, using appropriate criteria based on suitable evidence. But it is important that all managers read about all 200-plus biases, in order to recognise the scope and depth of bias in their day-to-day lives, and its possible effects.